Markov chain

A Markov chain is a model of some random process that happens over time. Markov chains are called that because they follow a rule called the Markov property. The Markov property says that whatever happens next in a process only depends on how it is right now (the state). It doesn't have a "memory" of how it was before. It is helpful to think of a Markov chain as evolving through discrete steps in time, although the "step" doesn't need to have anything to do with time.

Markov chains can be discrete or continuous. Discrete Time Markov Chains are split up into discrete time steps, like t = 1, t = 2, t = 3, and so on. The probability that a chain will go from one state to another state depends only on the state that it's in right now. Continuous Time Markov Chains are chains where the time spent in each state is a real number. The amount of time the chain stays in a certain state is randomly picked from an exponential distribution, which basically means there's an average time a chain will stay in some state, plus or minus some random variation.

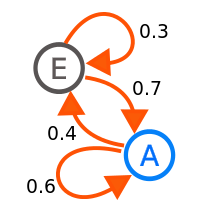

An example of a Markov chain are the dietary habits of a creature who only eats grapes, cheese or lettuce, and whose dietary habits conform to the following (artificial) rules:

- It eats exactly once a day.

- If it ate cheese yesterday, it will eat lettuce or grapes today with equal probability for each, and zero chance of eating cheese.

- If it ate grapes yesterday, it will eat grapes today with probability 1/10, cheese with probability 4/10 and lettuce with probability 5/10.

- Finally, if it ate lettuce yesterday, it won't eat it again today, but will eat grapes with probability 4/10 or cheese with probability 6/10.

This creature's eating habits can be modeled with a Markov chain since its choice depends on what it ate yesterday, not additionally on what it ate 2 or 3 (or 4, etc...) days ago. One statistical property one could calculate is the expected percentage of the time the creature will eat cheese over a long period.

-

Classical RAND Markov model of cocaine use.

Another classical example of a Markov chain is the 1995 model of cocaine use in Los Angeles designed by the RAND Corporation.[1] The model is governed by a series of equations, which describe the probability of a person being a non-user, light user (L) or heavy user (H) of cocaine at time t+1, given their prior probabilities at time t:

L(t+1) = I(t)-aL(t) +fH(t)-bL(t)

H(t+1) = bL(t)-fH(t)-gH(t)

I(t)+L(t)+H(t)=1

References

change- ↑ Sohler Everingham, Susan M.; Peter Rydell, C.; Caulkins, Jonathan P. (December 1995). "Cocaine consumption in the United States: Estimating past trends and future scenarios". Socio-Economic Planning Sciences. 29 (4): 305–314. doi:10.1016/0038-0121(95)00018-6. ISSN 0038-0121.